
The Zen of Claude Code: How Simplicity Beat Complexity in AI Agents

It's amazing how quickly the world of AI agents has changed, especially in the last couple of years. My talk, "The Zen of Claude Code," dives into the evolution of AI agents from 2023 to 2025.

The main takeaway: Simplicity beats complexity. One strong model in a local-first think → tool → read → repeat loop outperforms vector stacks and multi-model orchestration. This is yet another example of Sutton's Bitter Lesson.

The Problem with Over-Engineering (2023)

Back in 2023, the big excitement came with models like GPT-4. Immediately, people started trying to build truly autonomous agents. The most famous early attempt was AutoGPT.

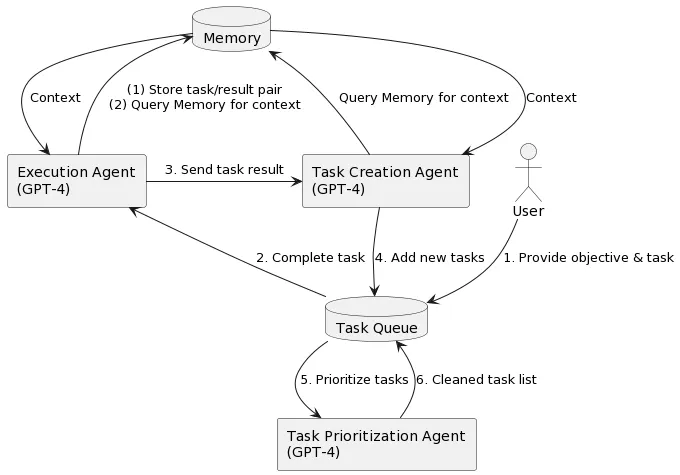

The architecture of AutoGPT was complex. It had a task creation agent, a prioritization agent, an execution agent, memory modules, and more.

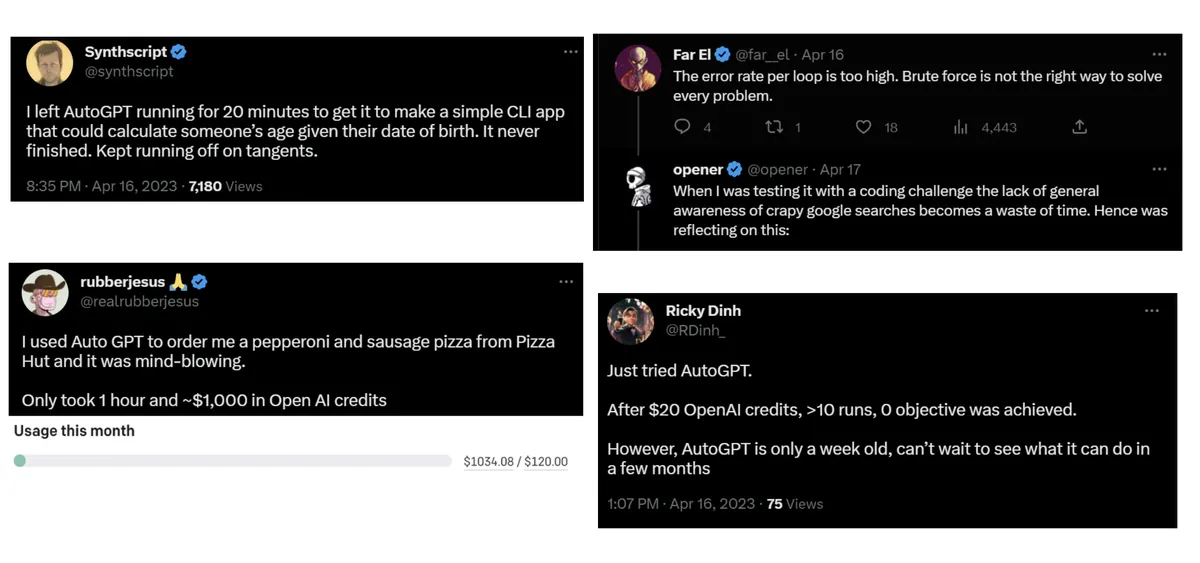

All that complexity, though, didn't actually translate to success. It was extremely expensive to run (I remember one humorous story of it costing $1,000 and an hour just to order a pizza — see the original tweet) and it ultimately couldn't reliably get complex tasks done. It was a great experiment, but it didn't really work in the real world.

The High-Cost Hybrid Approach: Cursor

The next evolution came with tools like Cursor.

Cursor was and is a success, proven by its multi-billion-dollar valuation (Series C announcement). But why did it work where AutoGPT failed?

As Andrej Karpathy discussed in his June 2025 talk on the “autonomy slider” (video), Cursor focused on human-AI collaboration instead of full autonomy.

A deep dive into Cursor’s build (see Pragmatic Engineer’s piece “Real-world engineering challenges: building Cursor”) reveals that it relies on a very complex technical stack:

- Embeddings and Vector Search: To understand the codebase, your code is chunked and embedded, and the embeddings are stored in a cloud vector database. The index has to be updated every time the code changes.

- Model Orchestration: It juggles multiple models for embedding, autocompletion, chat, and the agent, all orchestrated together.

- Local and Cloud Coupling: It volleys data between local processes and cloud processes (the vector database and the models).

The complexity paid off in performance, but at a very high cost in engineering effort and computational overhead.
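To make the re-indexing cost concrete, here is a minimal sketch of incremental index maintenance. It is not Cursor's implementation. It assumes naive fixed-size line chunks and SHA-256 content hashes, and the function names (`chunk_lines`, `digest`, `chunks_to_reembed`) are hypothetical. Only chunks whose hash has not been seen before would need to be sent for re-embedding.

```python
import hashlib


def chunk_lines(text: str, size: int = 40) -> list[str]:
    """Split a file into fixed-size windows of `size` lines (naive chunking)."""
    lines = text.splitlines()
    return ["\n".join(lines[i:i + size]) for i in range(0, len(lines), size)]


def digest(chunk: str) -> str:
    """Content hash used to recognize chunks that are already indexed."""
    return hashlib.sha256(chunk.encode("utf-8")).hexdigest()


def chunks_to_reembed(old_text: str, new_text: str, size: int = 40) -> list[str]:
    """Return the chunks of new_text whose content was not in the old index."""
    indexed = {digest(c) for c in chunk_lines(old_text, size)}
    return [c for c in chunk_lines(new_text, size) if digest(c) not in indexed]


if __name__ == "__main__":
    lines = [f"line {i}" for i in range(100)]
    old = "\n".join(lines)
    edited_end = "\n".join(lines[:-1] + ["line 99 changed"])
    edited_top = "\n".join(["# new header"] + lines)
    print(len(chunks_to_reembed(old, edited_end)))  # 1
    print(len(chunks_to_reembed(old, edited_top)))  # 3
```

With 40-line chunks, editing the last line of a 100-line file invalidates one chunk, but inserting a single line at the top shifts every chunk boundary and invalidates all three. Real indexers can reduce this with smarter chunking (for example, splitting on function boundaries), but that is more machinery to build and keep in sync.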

The Zen of Claude Code

Then came Claude Code in early 2025 (announcement with Claude 3.7 Sonnet).

It was quickly and widely adopted, becoming a major revenue driver for Anthropic. It’s an agent that lives right in your terminal and immediately impressed developers with its ability to actually get work done.

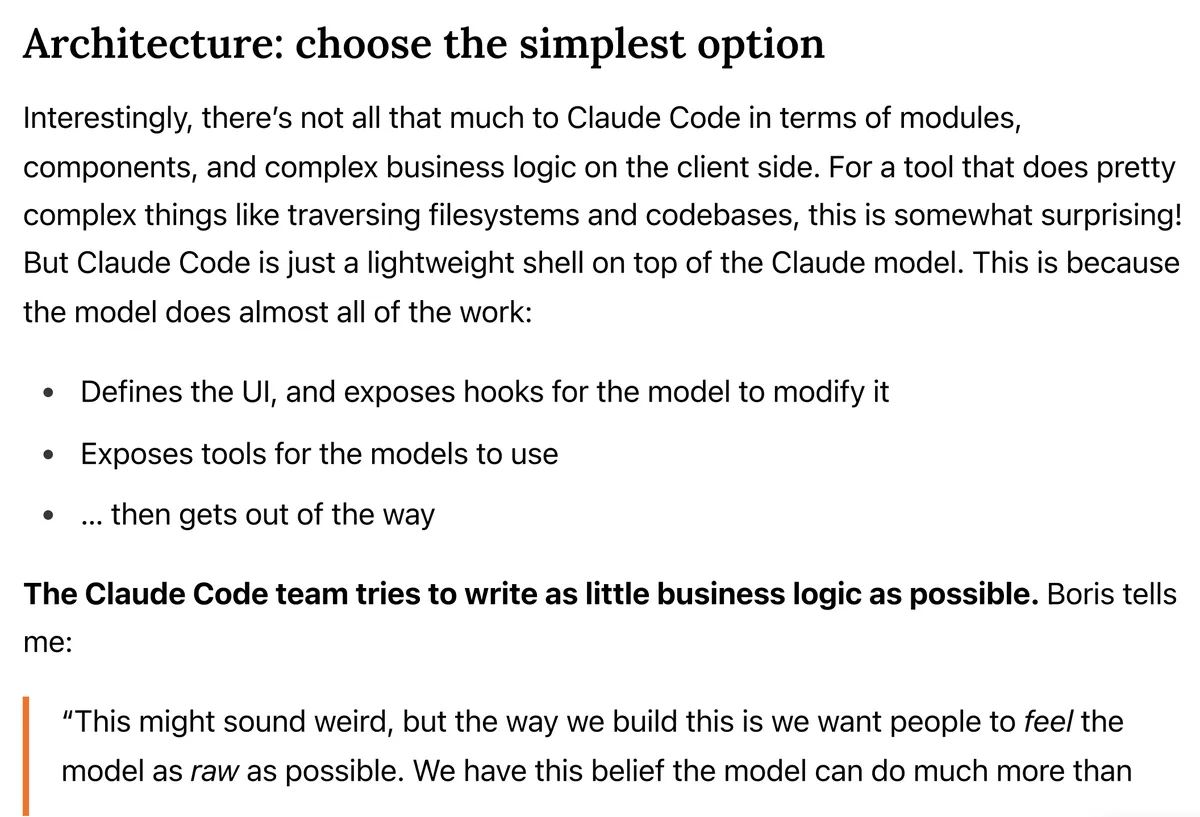

I was curious to find out what "wonders of engineering" made it possible. To my surprise, I discovered that Claude Code is an extremely simple application.

This is the "Zen of Claude Code"—an approach based on picking the simplest possible solution that works. A great article by Gergely Orosz confirmed this approach, emphasizing that the Claude Code team always chooses the simplest options, writing as little business logic as possible (How Claude Code is built).

In more detail:

- Local-First Operations: The application works 100% locally for file access and command execution; the only network calls are API requests to Anthropic.

- No Vector Search: Instead of embedding the codebase, the model uses plain tools to search, read, and write files.

- Simple Models: It mostly runs on a single model tier (e.g., Claude Sonnet or Opus).

- Simple Memory: Memory is handled with simple text files (such as CLAUDE.md) and summarization patterns; see Latent Space’s overview of the team’s “do the simple thing first” ethos (Claude Code: Anthropic's Agent in Your Terminal).
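As a rough illustration of what "plain tools" can mean, here is a grep-style search tool that an agent could call instead of querying a vector index. This is a sketch, not Claude Code's implementation. The name `search_files`, its parameters, and the `path:line: text` output format are assumptions. The model supplies a regular expression, reads the matching lines, and can then open the relevant files with a separate read tool.

```python
import re
from pathlib import Path


def search_files(root: str, pattern: str, glob: str = "**/*.py", max_hits: int = 50) -> str:
    """Grep-style search returning 'path:line: text' for each matching line."""
    regex = re.compile(pattern)
    base = Path(root)
    hits: list[str] = []
    for path in sorted(base.glob(glob)):
        if not path.is_file():
            continue
        try:
            lines = path.read_text(encoding="utf-8").splitlines()
        except (UnicodeDecodeError, OSError):
            continue  # skip binary or unreadable files
        for lineno, line in enumerate(lines, start=1):
            if regex.search(line):
                rel = path.relative_to(base).as_posix()
                hits.append(f"{rel}:{lineno}: {line.strip()}")
                if len(hits) >= max_hits:  # cap output to protect the context window
                    return "\n".join(hits)
    return "\n".join(hits) if hits else "No matches."
```

The design choice here is that the model, not an embedding pipeline, decides what to look for. Nothing is indexed ahead of time, so there is nothing to keep in sync when files change.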

The Power of the Tool Use Loop

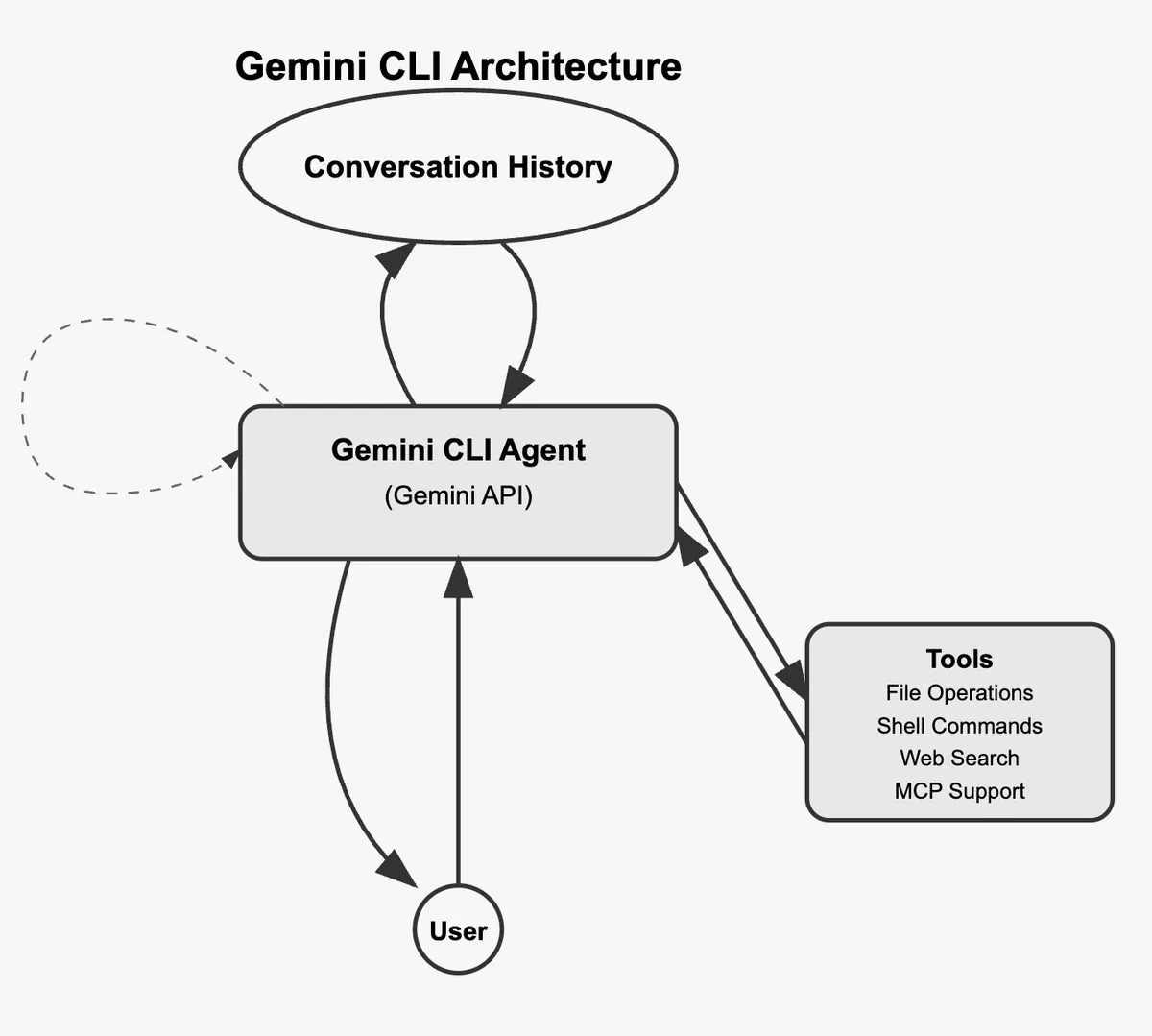

At the core of Claude Code and other successful modern agents is a simple loop with tool use. This is not new, but the simplicity is key. Thorsten Ball showed you can build a fully functional agent in under 400 lines (“How to Build an Agent (or: The Emperor Has No Clothes)”).

The loop works like this:

- User Request: The user sends a request (e.g., “Implement this feature”).

- Model Reasoning: The model reads the request and context and decides the next step.

- Tool Call: It calls a tool like `read_file` or `run_terminal_command`.

- Application Response: The app runs the tool and returns the result to the AI.

- Iterate: The model reads the tool output and loops back to the reasoning step, repeating until the task is done and it answers the user.
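The loop above is small enough to sketch directly. The following is a minimal, hypothetical Python version, not Claude Code's source. The tool names come from the examples in the list, and `scripted_model` is a stand-in that replays fixed decisions where a real agent would call an LLM API with the conversation and the tool definitions. The step format (`{"tool": ..., "args": ...}` or `{"text": ...}`) is an assumption made for readability.

```python
import subprocess
from pathlib import Path
from typing import Callable


# Hypothetical tools named after the examples in the text. A real agent would
# add sandboxing and permission checks before running anything.
def read_file(path: str) -> str:
    return Path(path).read_text(encoding="utf-8")


def run_terminal_command(command: str) -> str:
    result = subprocess.run(command, shell=True, capture_output=True, text=True)
    return result.stdout + result.stderr


TOOLS: dict[str, Callable[..., str]] = {
    "read_file": read_file,
    "run_terminal_command": run_terminal_command,
}

# A model is any function from the conversation so far to its next step:
# either {"tool": name, "args": {...}} or {"text": final_answer}.
Model = Callable[[list[dict]], dict]


def agent_loop(model: Model, user_request: str, max_steps: int = 20) -> str:
    messages: list[dict] = [{"role": "user", "content": user_request}]
    for _ in range(max_steps):
        step = model(messages)  # model reasoning: decide the next step
        messages.append({"role": "assistant", "content": step})
        if "tool" not in step:  # no tool call means the task is done
            return step["text"]
        tool = TOOLS.get(step["tool"])
        if tool is None:
            output = f"Error: unknown tool {step['tool']!r}."
        else:
            output = tool(**step["args"])  # application runs the tool
        messages.append({"role": "tool", "content": output})  # result goes back
    return "Stopped: step limit reached."


def scripted_model(messages: list[dict]) -> dict:
    """Stand-in for a real LLM call: run one command, then answer."""
    results = [m["content"] for m in messages if m["role"] == "tool"]
    if not results:
        return {"tool": "run_terminal_command", "args": {"command": "echo hello"}}
    return {"text": f"The command printed: {results[-1].strip()}"}


if __name__ == "__main__":
    print(agent_loop(scripted_model, "Say hello from the shell"))
```

All of the decision-making happens inside the model call; the application's job is only to run tools and feed the results back. The `max_steps` cap is a simple safety valve against a model that never stops calling tools.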

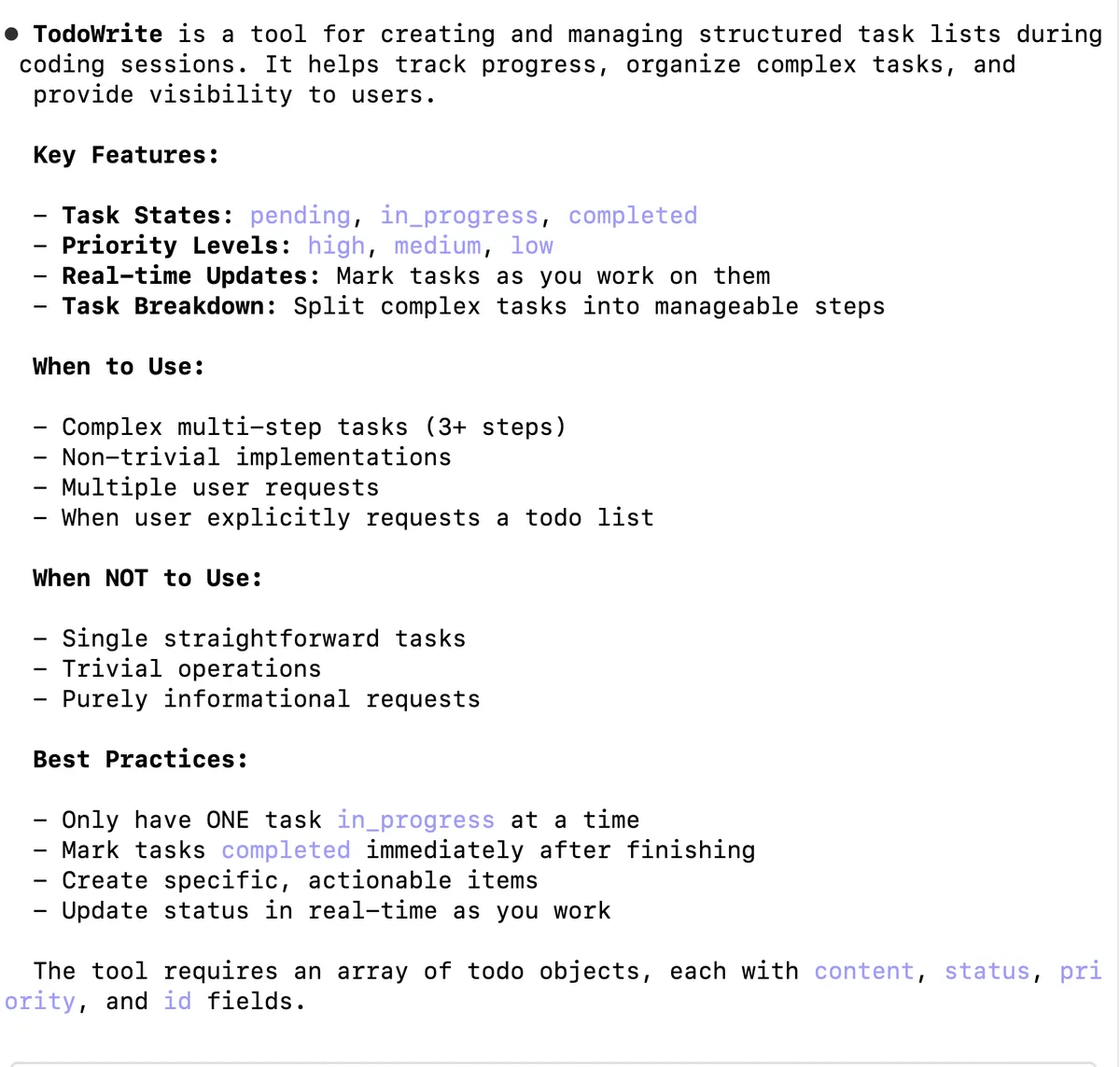

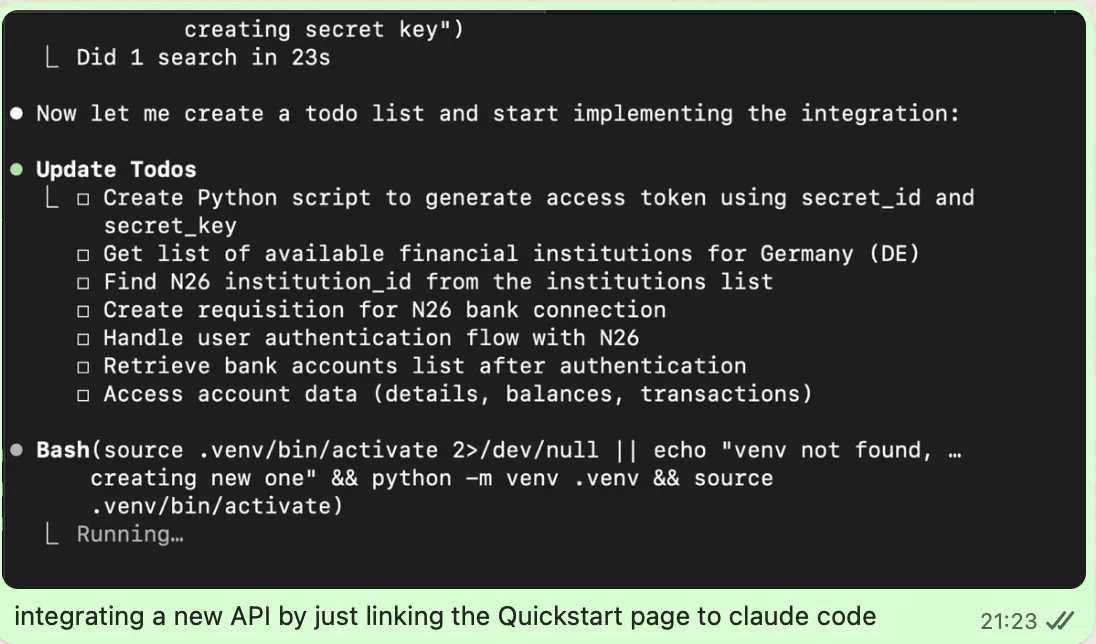

One clever example is the TodoWrite tool. It provides a lightweight task list the model can create and manage to scaffold multi-step plans.

I saw this in practice when I gave Claude Code the API documentation and asked it to integrate a new banking API: it created a to-do list and worked through it autonomously.

The TodoWrite tool demonstrates how a simple tool can enable a new capability (long-term planning) and enhance the model's practical intelligence.
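A TodoWrite-style tool needs very little code on the application side. The sketch below is hypothetical and simplified, not Anthropic's implementation. It assumes each call sends the complete list, which replaces the previous one, and that each item has a `content` string and a `status` of `pending`, `in_progress`, or `completed`. The rendered list is returned to the model as the tool result, so the plan stays visible in its context.

```python
from dataclasses import dataclass

VALID_STATUSES = ("pending", "in_progress", "completed")
MARKS = {"pending": "[ ]", "in_progress": "[~]", "completed": "[x]"}


@dataclass
class Todo:
    content: str
    status: str = "pending"


class TodoList:
    """Hypothetical state behind a TodoWrite-style tool."""

    def __init__(self) -> None:
        self.items: list[Todo] = []

    def write(self, todos: list[dict]) -> str:
        """Replace the whole list, validate it, and return it rendered as text."""
        new_items = [Todo(t["content"], t.get("status", "pending")) for t in todos]
        for item in new_items:
            if item.status not in VALID_STATUSES:
                return f"Error: unknown status {item.status!r} for {item.content!r}."
        if sum(item.status == "in_progress" for item in new_items) > 1:
            return "Error: only one task may be in_progress at a time."
        self.items = new_items
        return self.render()

    def render(self) -> str:
        return "\n".join(f"{MARKS[t.status]} {t.content}" for t in self.items)


if __name__ == "__main__":
    todos = TodoList()
    # Hypothetical plan for an API integration task.
    print(todos.write([
        {"content": "Read the banking API docs", "status": "in_progress"},
        {"content": "Write the API client"},
        {"content": "Add tests"},
    ]))
```

Returning validation problems as plain text, rather than raising an exception, lets the model read the error and resend a corrected list on the next turn of the loop. The single `in_progress` rule is a choice made for this sketch to keep the model focused on one step at a time.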

The Bitter Lesson: Intelligence Beats Engineering

The huge shift from the complex, brittle AutoGPT to the simple, effective Claude Code is a great example of Richard Sutton’s “The Bitter Lesson.”

In the past, when models were dumber, engineers tried to compensate by building incredibly complex architectures to guide them. They tried to manually program the how.

Today, the models are so much smarter that the complex scaffolding holds them back. The winning approach is to give the model the simplest architecture—an LLM in a loop with tools—and let it figure out the how. The simpler system is more effective because it gets out of the model's way.

Practical Takeaways for Engineers

- Intelligence Beats Engineering: Don’t spend months on brittle architectures when a more capable model is available or imminent. Prefer a stronger model over more scaffolding.

- Get Out of the Model's Way: Reduce complexity. Provide only minimal scaffolding.

- Tool Use is Effective: Simple tools like `TodoWrite` expand practical capability.

- Agents are General: Claude Code was marketed as a coding assistant, but it’s a general agent (McKay Wrigley; Latent Space). With connectors, you can use it well beyond coding (Claude connectors directory).

This post was based on my talk, "The Zen of Claude Code: The Evolution of AI Agents from 2023 to 2025," originally presented at AI Camp Berlin in August 2025.